AI and Surveillance: Balancing Security and Civil Liberties

The use of artificial intelligence (AI) in surveillance has become a focal point of global debate, particularly since its deployment at major events such as the 2024 Paris Summer Olympics. While AI surveillance offers enhanced security capabilities, it also raises significant concerns about privacy and civil liberties. This article examines the implications of AI surveillance, the balance between security and privacy, and the potential for misuse of surveillance technologies.

AI Surveillance at the Paris Olympics

In preparation for the 2024 Summer Olympics, Paris implemented an AI-enabled surveillance system designed to bolster security. The system uses algorithms to analyze footage from thousands of CCTV cameras, flagging potential threats such as abandoned bags, crowd disturbances, and other anomalies[1][3][5]. The French government has emphasized that the system does not use facial recognition or collect biometric data, focusing instead on behavioral patterns[3][5].
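The authorities have not published the system's algorithms, so the sketch below is purely hypothetical. It illustrates how a behavioral rule such as abandoned-bag detection could operate on anonymous object tracks (a label, a track number, a timestamp, and a position) instead of faces. It assumes an upstream object detector and tracker already exist; the `Detection` type, the `abandoned_bags` function, and all thresholds are illustrative assumptions, not details of the Paris system.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Detection:
    """One tracked object in one video frame (hypothetical tracker output).

    Only a class label, an anonymous track number, a timestamp and a
    ground-plane position are kept; no faces or other biometric features.
    """
    track_id: int
    label: str   # assumed label set: "bag" or "person"
    t: float     # seconds since start of recording
    x: float     # metres
    y: float     # metres


def abandoned_bags(detections, still_radius=0.5, owner_radius=3.0, min_seconds=60.0):
    """Return sorted track ids of bags left stationary with no person nearby.

    A bag is flagged once it has stayed within `still_radius` metres of the
    spot where it became unattended, with no person within `owner_radius`
    metres, for at least `min_seconds`. All thresholds are illustrative.
    """
    frames = defaultdict(list)
    for d in detections:
        frames[d.t].append(d)

    unattended_since = {}  # bag track_id -> (start time, x, y)
    flagged = set()
    for t in sorted(frames):
        people = [d for d in frames[t] if d.label == "person"]
        for bag in (d for d in frames[t] if d.label == "bag"):
            attended = any(hypot(p.x - bag.x, p.y - bag.y) <= owner_radius for p in people)
            if attended:
                # Someone is near the bag: reset its unattended timer.
                unattended_since.pop(bag.track_id, None)
                continue
            start = unattended_since.get(bag.track_id)
            if start is None or hypot(bag.x - start[1], bag.y - start[2]) > still_radius:
                # Newly unattended, or moved since: (re)start the timer here.
                unattended_since[bag.track_id] = (t, bag.x, bag.y)
            elif t - start[0] >= min_seconds:
                flagged.add(bag.track_id)
    return sorted(flagged)
```

Even without faces, a rule like this consumes the movements of everyone in view, which is one reason critics argue that privacy law can still apply.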

Despite these assurances, the deployment of AI surveillance has sparked controversy. Critics argue that even without explicit biometric data collection, the technology could still infringe on privacy rights and violate European Union privacy laws, such as the General Data Protection Regulation (GDPR)[3][6]. Concerns have been raised about the potential for the system to remain in place post-Olympics, leading to a permanent increase in surveillance[5][7].

Privacy Concerns and Civil Liberties

AI surveillance poses several challenges to civil liberties:

- Right to Privacy: The widespread use of AI surveillance can lead to the erosion of privacy, as individuals are constantly monitored without their explicit consent[2][4].

- Freedom of Expression: The presence of surveillance can create a chilling effect, deterring individuals from expressing themselves freely due to fear of being monitored[2][4].

- Potential for Discrimination: AI systems may perpetuate biases, leading to discriminatory practices, particularly against marginalized communities[2][4].
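One way auditors test the discrimination concern is to compare how often a system flags people from different groups. The minimal Python sketch below computes per-group flag rates and the gap between the highest and lowest rate (often called the demographic parity difference). The function names and the `(group, was_flagged)` record format are hypothetical; a gap on its own does not prove discrimination, and metrics such as per-group false-positive rates may be more informative in practice.

```python
from collections import Counter


def flag_rates(records):
    """Share of people flagged by the system, per group.

    `records` is an iterable of (group, was_flagged) pairs, e.g. drawn from a
    hypothetical audit sample where group membership is known.
    """
    totals, flagged = Counter(), Counter()
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in flag rate between any two groups (0 means equal rates)."""
    return max(rates.values()) - min(rates.values())
```

For example, if group A is flagged in 2 of 10 cases and group B in 5 of 10, the rates are 0.2 and 0.5 and the gap is 0.3.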

Ethical and Legal Implications

The ethical implications of AI surveillance are profound. There is a risk of abuse of power, where surveillance technologies could be used for political purposes or to target specific groups[2]. The lack of transparency and accountability in the deployment of these systems further exacerbates these concerns[6][8].

Legally, the use of AI surveillance must navigate complex regulatory landscapes. France passed a law authorizing algorithmic video surveillance on an experimental basis for the Olympics, with the trial running until March 2025, and critics argue that this temporary measure could set a precedent for future surveillance practices[6][7]. The challenge lies in ensuring that security measures do not come at the expense of fundamental rights and freedoms.

Balancing Security and Privacy

Finding a balance between security and privacy is crucial. Governments and organizations must:

- Establish Clear Guidelines: Develop comprehensive regulations that define the scope and limitations of AI surveillance, ensuring compliance with privacy laws[2][6].

- Ensure Transparency: Implement transparent practices that allow for public scrutiny and accountability in the use of surveillance technologies[6][8].

- Promote Privacy-Enhancing Technologies: Invest in technologies that protect privacy, such as advanced encryption methods and anti-surveillance tools[8].
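Transparency and accountability are easier to enforce when every use of a surveillance system leaves a record that cannot be silently edited. The sketch below is a hypothetical tamper-evident audit log in Python: each entry stores the SHA-256 hash of the previous one, so altering a past entry breaks the chain. The field names and the `append_entry`/`verify` helpers are assumptions for illustration, not a prescribed standard.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, operator: str, action: str, reason: str, timestamp: str) -> dict:
    """Append an access record that commits to the hash of the previous record."""
    prev = log[-1]["hash"] if log else GENESIS
    body = {"operator": operator, "action": action, "reason": reason,
            "timestamp": timestamp, "prev": prev}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Return False if any entry was altered, or entries were reordered or
    removed from the middle. Truncating the end is not detected here."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev") != prev or _digest(body) != entry.get("hash"):
            return False
        prev = entry["hash"]
    return True
```

A chain like this only detects tampering reliably if its latest hash is also held by someone outside the operator's control, such as an oversight body; otherwise the whole chain could be recomputed.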

The use of AI for surveillance during events like the Paris Olympics highlights the ongoing tension between security needs and the protection of civil liberties. As AI technologies continue to evolve, it is imperative to address these challenges through robust legal frameworks, ethical considerations, and public engagement. By doing so, societies can harness the benefits of AI while safeguarding individual rights and freedoms.

Artificial Intelligence and Privacy: Navigating the EU AI Act

As artificial intelligence (AI) technologies continue to evolve, their intersection with data privacy has become a growing concern. The EU AI Act (Regulation (EU) 2024/1689), adopted in 2024, regulates AI technologies with the aim of addressing their privacy implications and ensuring their ethical use. This initiative reflects a global trend of governments focusing on AI governance to protect individual rights while fostering innovation.

The EU AI Act: An Overview

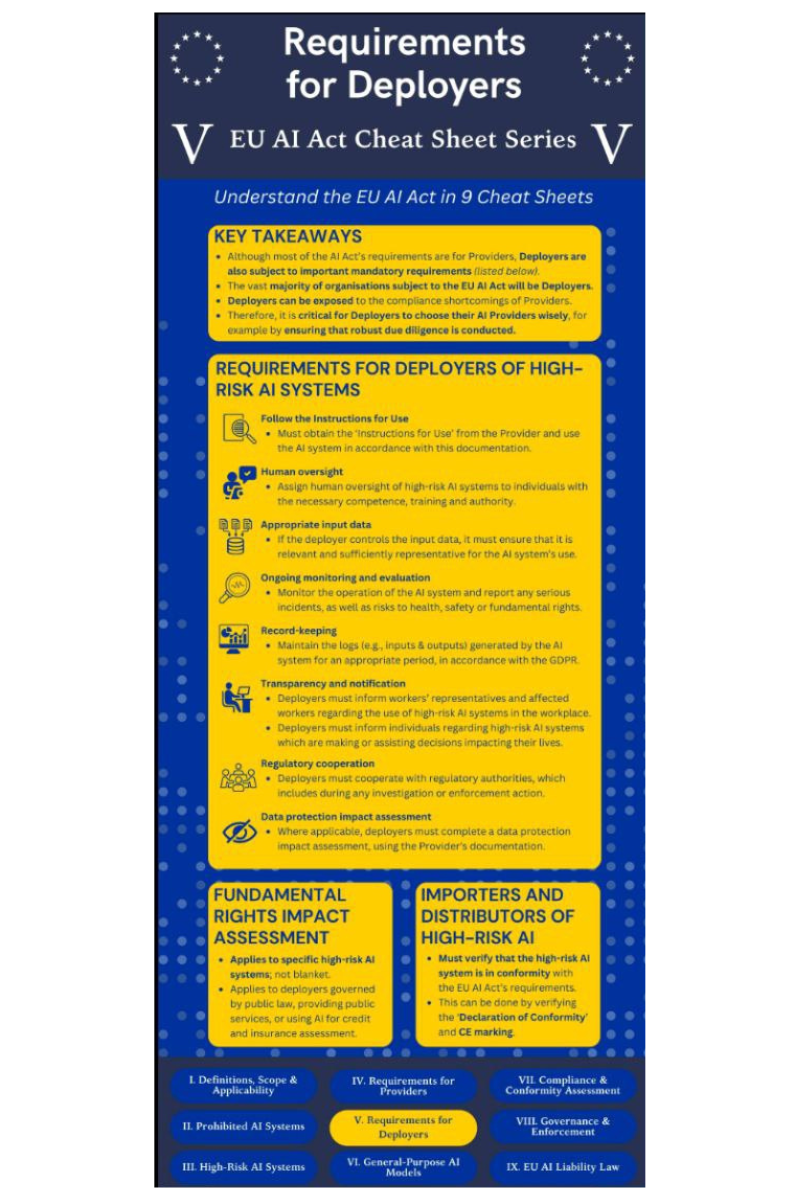

The EU AI Act represents a comprehensive effort to regulate AI technologies within the European Union. It seeks to ensure that AI systems are developed and used in a way that respects fundamental rights, including privacy. Key features of the Act include:

- Risk-Based Classification: AI systems are categorized by the risk they pose to health, safety, and fundamental rights, in four tiers: unacceptable risk (prohibited), high risk, limited risk (mainly transparency obligations), and minimal risk. High-risk AI systems, such as those used in critical infrastructure or law enforcement, are subject to stringent requirements.

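- Transparency and Accountability: Providers of high-risk systems must supply technical documentation and instructions for use, and certain systems, such as chatbots or generators of deepfakes, must disclose to people that they are dealing with AI or AI-generated content.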

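- Prohibition of Harmful Practices: Certain AI practices are banned outright because of their risks to fundamental rights, including privacy. Examples include social scoring and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, although the latter is permitted in narrowly defined cases, such as searching for victims of abduction or preventing an imminent terrorist threat.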
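The risk tiers can be pictured as a lookup from use case to obligations. The Python sketch below is a heavily simplified, hypothetical illustration: the use-case keys and the one-line obligation summaries are assumptions, and real classification under the Act depends on its detailed legal text and exceptions.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements, e.g. risk management, data governance, documentation, human oversight"
    LIMITED = "transparency obligations, e.g. telling people they are interacting with AI"
    MINIMAL = "no tier-specific obligations; voluntary codes of conduct encouraged"


# Hypothetical, heavily simplified examples; not a legal classification.
EXAMPLE_USES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    # Prohibited in principle, with narrow law-enforcement exceptions.
    "realtime_remote_biometric_id_in_public_by_police": RiskTier.UNACCEPTABLE,
    "critical_infrastructure_safety_component": RiskTier.HIGH,
    "police_assessment_of_evidence_reliability": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Look up a use case; anything unlisted needs a real case-by-case assessment."""
    try:
        return EXAMPLE_USES[use_case]
    except KeyError:
        raise ValueError(f"{use_case!r} is not covered by this sketch") from None
```

For example, `classify("spam_filter")` returns `RiskTier.MINIMAL`, while an unlisted use case raises `ValueError` instead of silently defaulting to a low tier, a deliberately conservative design choice.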

Privacy Implications and Ethical Considerations

The EU AI Act places a strong emphasis on privacy protection throughout the lifecycle of AI systems. It aligns with existing EU data protection laws, such as the General Data Protection Regulation (GDPR), to ensure comprehensive coverage of privacy rights. Key privacy-related aspects include:

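- Data Governance: Training, validation, and testing data for high-risk AI systems must be subject to data governance and management practices, including examination for possible biases, and any processing of personal data must still comply with data protection law.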

- Human Oversight: The Act emphasizes the importance of human oversight in AI decision-making processes, particularly for systems that impact individual rights.
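Human oversight is often implemented as a gate: the model may recommend an action, but a person decides before anything adverse happens to an individual. The Act's oversight provisions for high-risk systems include the ability for a person to disregard or override a system's output. The Python sketch below is a hypothetical illustration of that pattern; the `Decision` record, the `decide` function, and the threshold are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Decision:
    subject_id: str
    model_score: float
    outcome: Optional[str] = None
    reviewed_by: Optional[str] = None


# A reviewer sees the pending decision and returns (reviewer name, approve?).
Reviewer = Callable[[Decision], Tuple[str, bool]]


def decide(subject_id: str, score: float, threshold: float, reviewer: Reviewer) -> Decision:
    """Let the model trigger nothing adverse on its own.

    Scores below `threshold` need no action. Scores at or above it are only
    recommendations: a human reviewer must confirm them, and may override.
    """
    decision = Decision(subject_id, score)
    if score < threshold:
        decision.outcome = "no_action"
        return decision
    name, approved = reviewer(decision)
    decision.reviewed_by = name  # record who took responsibility
    decision.outcome = "action_confirmed" if approved else "overridden"
    return decision
```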

Despite these measures, concerns remain about the potential for AI technologies to infringe on privacy rights. The Act excludes AI systems used exclusively for military, defence, or national security purposes, which has raised questions about the balance between security and privacy. Additionally, the limited transparency obligations for law enforcement use of AI highlight the need for ongoing scrutiny and oversight.

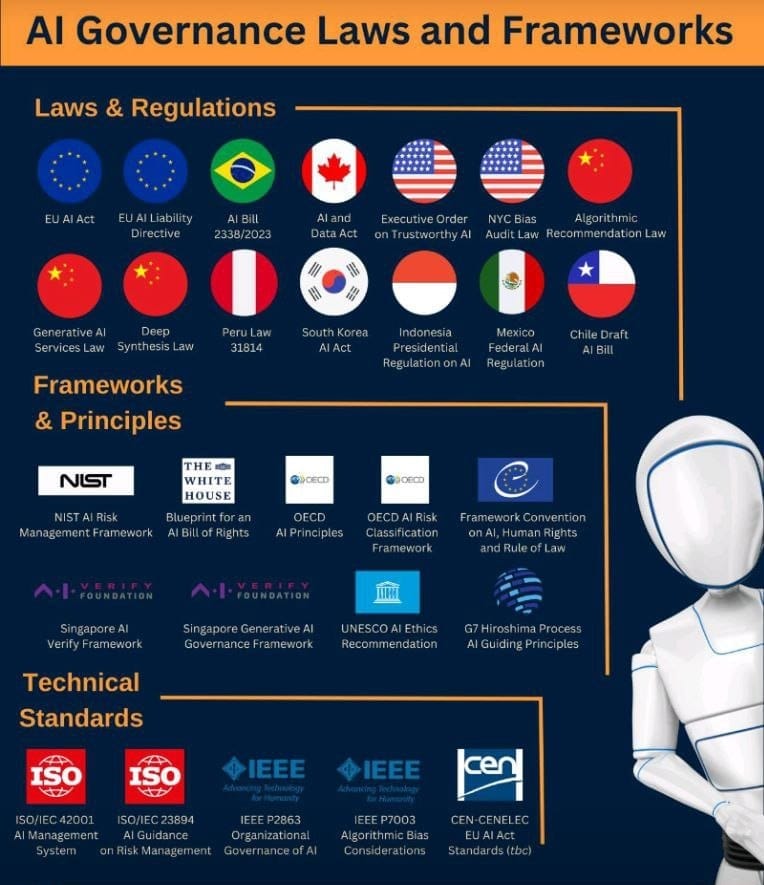

Global Trends in AI Governance

The EU AI Act is part of a broader global movement towards regulating AI technologies. Countries around the world are recognizing the need to address the ethical and privacy implications of AI. Key trends include:

- Ethical AI Development: Governments and organizations are increasingly focusing on ethical AI development, ensuring that AI systems are designed to respect human rights and avoid biases.

- International Collaboration: Cross-border collaboration is essential to establish global standards for AI governance, ensuring consistency and interoperability.

Challenges and Opportunities

While the EU AI Act sets a precedent for AI regulation, it also presents challenges for businesses and regulators:

- Compliance Complexity: Companies must navigate complex compliance requirements, balancing innovation with regulatory obligations.

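- Innovation and Privacy: The Act's emphasis on privacy protection throughout the AI lifecycle creates an incentive to adopt privacy-preserving AI techniques, such as federated learning and differential privacy, and it provides for AI regulatory sandboxes in which new systems can be developed and tested under supervision.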
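Federated learning and differential privacy are general-purpose techniques rather than methods the Act itself specifies. The Python sketch below shows one standard building block of each: the Laplace mechanism, which releases a count with noise calibrated to a privacy parameter `epsilon`, and the server-side averaging step of federated averaging (FedAvg), which combines model parameters trained locally so raw data never leaves the clients. Function names and inputs are hypothetical.

```python
import random
from typing import List, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale): the difference of two i.i.d. exponentials
    with mean `scale` is Laplace-distributed with that scale."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random, sensitivity: float = 1.0) -> float:
    """Laplace mechanism: if adding or removing one record changes the count
    by at most `sensitivity`, noise of scale sensitivity/epsilon gives
    epsilon-differential privacy for this single release."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def federated_average(client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]) -> List[float]:
    """FedAvg aggregation: average client parameter vectors, weighted by each
    client's number of local training examples. Only parameters are shared."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]
```

Smaller values of `epsilon` add more noise and give stronger privacy; the averaging step shown is only the aggregation half of federated learning, with local training on each client assumed to happen elsewhere.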

Conclusion

The EU AI Act represents a significant step forward in regulating AI technologies, addressing privacy concerns and ethical considerations. As AI continues to transform industries, it is crucial to establish robust governance frameworks that protect individual rights while enabling innovation. By prioritizing transparency, accountability, and ethical development, the EU AI Act aims to create a balanced approach to AI governance that can serve as a model for other regions.

Citations:

[1] https://www.dw.com/en/paris-tests-ai-surveillance-ahead-of-olympics/a-68874609

[2] https://www.linkedin.com/pulse/ethics-ai-surveillance-balancing-security-privacy-aiethics-spair--sc2pe

[3] https://www.popsci.com/technology/paris-olympics-ai-surveillance/

[4] https://www.ohchr.org/sites/default/files/Documents/Issues/DigitalAge/Submissions/CSOs/ICNL.pdf

[5] https://www.wired.com/story/at-the-olympics-ai-algorithms-are-watching-you/

[6] https://www.lawfaremedia.org/article/algorithmic-surveillance-takes-the-stage-at-the-paris-olympics

[7] https://georgetownlawtechreview.org/playing-games-with-rights-a-case-against-ai-surveillance-at-the-2024-paris-olympics/GLTR-05-2024/

[8] https://thebulletin.org/2024/06/how-ai-surveillance-threatens-democracy-everywhere/