When Police Become Casualties of Censorship: How the UK's Online Safety Act Is Blocking Vital Public Communications

The Day Police Missing Person Alerts Became "Age-Restricted Content"

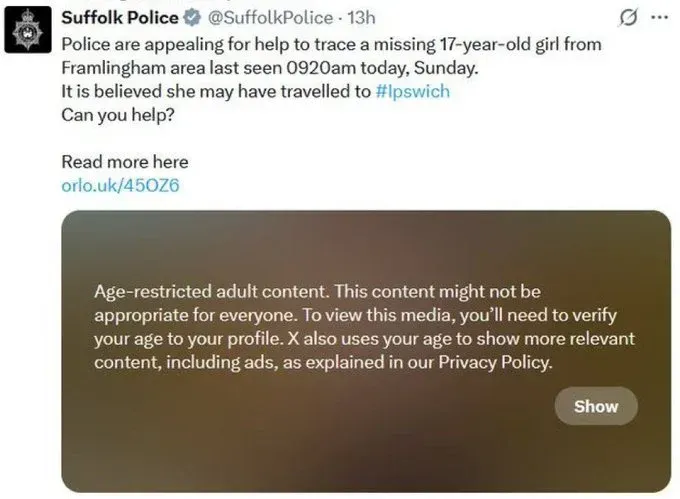

In a development that perfectly encapsulates the unintended consequences of the UK's sweeping censorship regime, police missing person alerts are now being blocked by social media platforms as "age-restricted content." What should be urgent public safety communications designed to help find vulnerable individuals are instead being hidden behind verification walls, potentially hampering search efforts and endangering lives.

The irony is stark: a law ostensibly designed to protect children is now preventing the public from seeing appeals to help find missing teenagers. This represents not just a technical glitch, but a fundamental breakdown in how democratic societies balance safety with free expression—and how automated censorship systems inevitably target the wrong content.

When Public Safety Meets Political Control

The UK's Online Safety Act, whose child safety duties became enforceable on July 25, 2025, was marketed as child protection legislation. The reality has proven far more expansive and troubling. Within hours of those duties taking effect, platforms began restricting access to lawful content, including police communications, parliamentary speeches, and news footage, creating what critics describe as the UK's most comprehensive system of online censorship to date.

The Suffolk Police missing person alert highlighted in this investigation is just one example of how this system is failing in practice. A routine appeal for help finding a 17-year-old girl from the Framlingham area, exactly the kind of urgent public information that social media is suited to spreading quickly, is now hidden behind age verification requirements that many users cannot easily complete.

This isn't merely an administrative inconvenience. Missing person cases are time-sensitive. The first 48 hours are often critical, and social media has revolutionized how quickly information can spread across communities. Because police forces increasingly rely on platforms like Facebook, X, and Instagram to crowdsource search efforts, blocking these communications directly undermines public safety.

The Automated Censorship Problem

The technical implementation of the Online Safety Act relies heavily on automated content moderation systems that lack the nuance to distinguish between legitimate police communications and potentially harmful content. These algorithms, designed to err on the side of caution, are casting an impossibly wide net.

Police footage showing arrests during protests has been restricted. Parliamentary speeches about serious criminal matters have been flagged as harmful content. Even missing person appeals—perhaps the most uncontroversial form of police communication—are being caught in these digital filters.

The platforms themselves appear to have little choice. With fines for non-compliance of up to £18 million or 10% of global turnover, whichever is greater, social media companies are adopting the most restrictive interpretations possible. The result is a system where algorithms make split-second decisions about what British citizens can see, with little human oversight or avenue for appeal.
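To make that incentive concrete, the penalty ceiling can be written as simple arithmetic. The sketch below is illustrative only: the function name and the revenue figures are hypothetical, and it assumes the ceiling is the greater of the flat amount and 10% of worldwide revenue.

```python
# Illustrative arithmetic only; the revenue figures are hypothetical, not real company data.
FLAT_CAP_GBP = 18_000_000   # flat ceiling cited above
REVENUE_SHARE_PERCENT = 10  # share of worldwide revenue cited above


def max_penalty_gbp(worldwide_revenue_gbp: int) -> int:
    """Return the penalty ceiling, assuming the greater of the two caps applies."""
    revenue_based_cap = worldwide_revenue_gbp * REVENUE_SHARE_PERCENT // 100
    return max(FLAT_CAP_GBP, revenue_based_cap)


if __name__ == "__main__":
    for revenue in (50_000_000, 1_000_000_000, 100_000_000_000):
        print(f"revenue £{revenue:,} -> ceiling £{max_penalty_gbp(revenue):,}")
```

For a hypothetical platform with £100 billion in annual revenue, the ceiling under this assumption is £10 billion, a figure that dwarfs whatever it costs the platform to over-block a lawful post.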

A Pattern of Expanding Control

The Online Safety Act didn't emerge in a vacuum. It represents the culmination of a years-long trend toward increased government control over online expression in the UK. The context is crucial for understanding how a law about child protection has become a tool for broader speech suppression.

Since 2016, over 3,000 people annually have been detained and questioned for online speech in the UK. Police have formed dedicated units to monitor social media for content deemed problematic. The Metropolitan Police have explicitly threatened to pursue citizens of other countries for posts that violate UK speech laws. These aren't isolated incidents but part of a systematic approach to controlling digital discourse.

The formation of elite police squads specifically to monitor "anti-migrant" social media posts shows how readily online monitoring is directed at political speech, and why critics fear that child protection measures can be repurposed for political enforcement just as quickly. When law enforcement resources are dedicated to monitoring political speech rather than solving crimes, the priorities of the state become clear.

International Alarm and Diplomatic Tensions

The UK's approach to online censorship hasn't gone unnoticed internationally. The US State Department has described social media regulation in the UK and EU as "Orwellian." Vice President J.D. Vance has opposed the legislation as part of broader speech suppression across Europe. These aren't casual diplomatic observations but serious concerns about the direction of a key ally.

The tension extends beyond rhetoric. UK-US trade relationships are becoming strained over these issues, with technology companies caught between competing regulatory regimes. When democratic allies begin describing each other's speech policies in terms typically reserved for authoritarian regimes, it signals a fundamental shift in how freedom of expression is valued.

The Chilling Effect on Democratic Discourse

The most concerning aspect of this system isn't what it blocks today, but what it signals about acceptable discourse tomorrow. When police communications become subject to political censorship, when parliamentary speeches are flagged as harmful content, and when missing person alerts require age verification, the boundaries of acceptable speech have shifted dramatically.

This creates what experts call a "chilling effect," in which individuals and institutions self-censor rather than risk running afoul of increasingly broad and unpredictable enforcement. Police departments may begin crafting their communications to avoid triggering automated filters. Politicians may moderate their language to prevent their speeches from being restricted. Citizens may think twice before sharing information that could be deemed problematic.

The result is a more constrained public discourse, where the most important conversations—about crime, about public safety, about government policy—happen in whispers rather than in the open forums that democracy requires.

Technical Failures and Democratic Deficits

The current system fails on multiple levels. Technically, it relies on imperfect automation that cannot distinguish context or intent. Procedurally, it provides little recourse for wrongly restricted content. Democratically, it places unelected technology companies in the position of determining what citizens can see and discuss.

Many UK users cannot even access the age verification systems that would theoretically restore their access to restricted content. Platforms like X have implemented these requirements without providing clear pathways for users to complete verification. The default state for many British internet users has become restriction, not freedom.

The surge in VPN usage, with increases of over 700% reported immediately after the law's implementation, demonstrates that citizens are actively seeking ways around these restrictions. When a significant portion of the population begins using tools typically associated with evading censorship under authoritarian regimes to access basic information, the democratic nature of the system comes into question.

The Precedent Problem

Perhaps most troubling is the precedent this establishes. Russia introduced similar "child safety" measures in 2012, which were quickly weaponized to block political opposition and LGBT content. Turkey's Social Media Law, ostensibly designed to protect "family values," became a tool for suppressing dissent and compelling platforms to hand over user data.

The UK appears to be following a familiar playbook: introduce sweeping online controls under the banner of child protection, then gradually expand their application to political speech. The fact that this expansion happened within days, not years, suggests either remarkable incompetence in drafting the legislation or a deliberate strategy to normalize broader censorship.

A Crisis of Priorities

When police missing person alerts become casualties of censorship, society's priorities have become dangerously misaligned. The same government that claims to be protecting children is simultaneously making it harder to find missing teenagers. The same systems designed to promote safety are hampering the very institutions responsible for public security.

This represents more than technical failure. It reveals a fundamental misunderstanding of how democratic societies should reconcile safety and freedom. The two aren't opposing forces that must be traded off against each other; they're complementary values that strengthen each other when properly balanced.

The Path Forward

The current situation is neither sustainable nor acceptable in a democratic society. Reform must address several key areas:

Technical Reform: Automated content moderation systems must be designed with appropriate safeguards for legitimate speech, particularly government communications serving public safety functions.

Procedural Reform: There must be clear, accessible appeals processes for wrongly restricted content, with human review for sensitive categories like police communications.

Scope Reform: Child protection measures should be narrowly tailored to actual child protection, not used as justification for broader speech controls.

Oversight Reform: Democratic oversight of these systems must be strengthened, with regular parliamentary review and public reporting on what content is being restricted and why.

International Coordination: The UK must work with democratic allies to develop approaches to online safety that don't undermine fundamental freedoms or create trade tensions.
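As a rough illustration of the technical and procedural reforms listed above, the following sketch sends flagged posts from verified public bodies to human review instead of restricting them automatically. Everything in it is hypothetical: the `Post` fields, the `route` function, and the 0.8 threshold are assumptions made for illustration, not a description of how any platform or the Act actually works.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    SHOW = "show"
    HUMAN_REVIEW = "human_review"
    AGE_GATE = "age_gate"


@dataclass(frozen=True)
class Post:
    author_is_verified_public_body: bool  # hypothetical flag, e.g. a police force account
    risk_score: float                     # hypothetical classifier output in [0, 1]


RISK_THRESHOLD = 0.8  # assumed cut-off, chosen only for illustration


def route(post: Post) -> Decision:
    """Decide how a post is handled under the sketched safeguards."""
    if post.risk_score < RISK_THRESHOLD:
        return Decision.SHOW
    if post.author_is_verified_public_body:
        # Flagged public safety communications are queued for a human reviewer
        # rather than age-gated by the classifier alone.
        return Decision.HUMAN_REVIEW
    return Decision.AGE_GATE
```

Under these assumptions, a missing person appeal from a verified police account that a classifier scores at 0.93 would go to a reviewer, while the same score on an unverified account would still be age-gated.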

Conclusion: The Cost of Control

The blocking of police missing person alerts is a microcosm of what's wrong with the UK's approach to online safety. In the name of protecting children, the system is endangering children. In the name of promoting safety, it's hampering the institutions responsible for safety. In the name of creating the "safest place in the world to be online," it has created one of the most restrictive.

The Suffolk Police appeal for help finding a missing 17-year-old should be seen by as many people as possible, as quickly as possible. Instead, it's hidden behind bureaucratic barriers that serve no legitimate purpose. This isn't child protection—it's the normalization of a surveillance state that treats every citizen as a potential threat to be monitored and controlled.

Democratic societies have thrived for centuries on the principle that more speech, not less, is the answer to society's problems. The UK's current path represents a fundamental rejection of that principle. The question now is whether British citizens will accept this new reality or demand the restoration of the freedoms that made their democracy worth defending in the first place.

When the police themselves become casualties of censorship, it's time to ask who this system is really designed to protect—and from what.